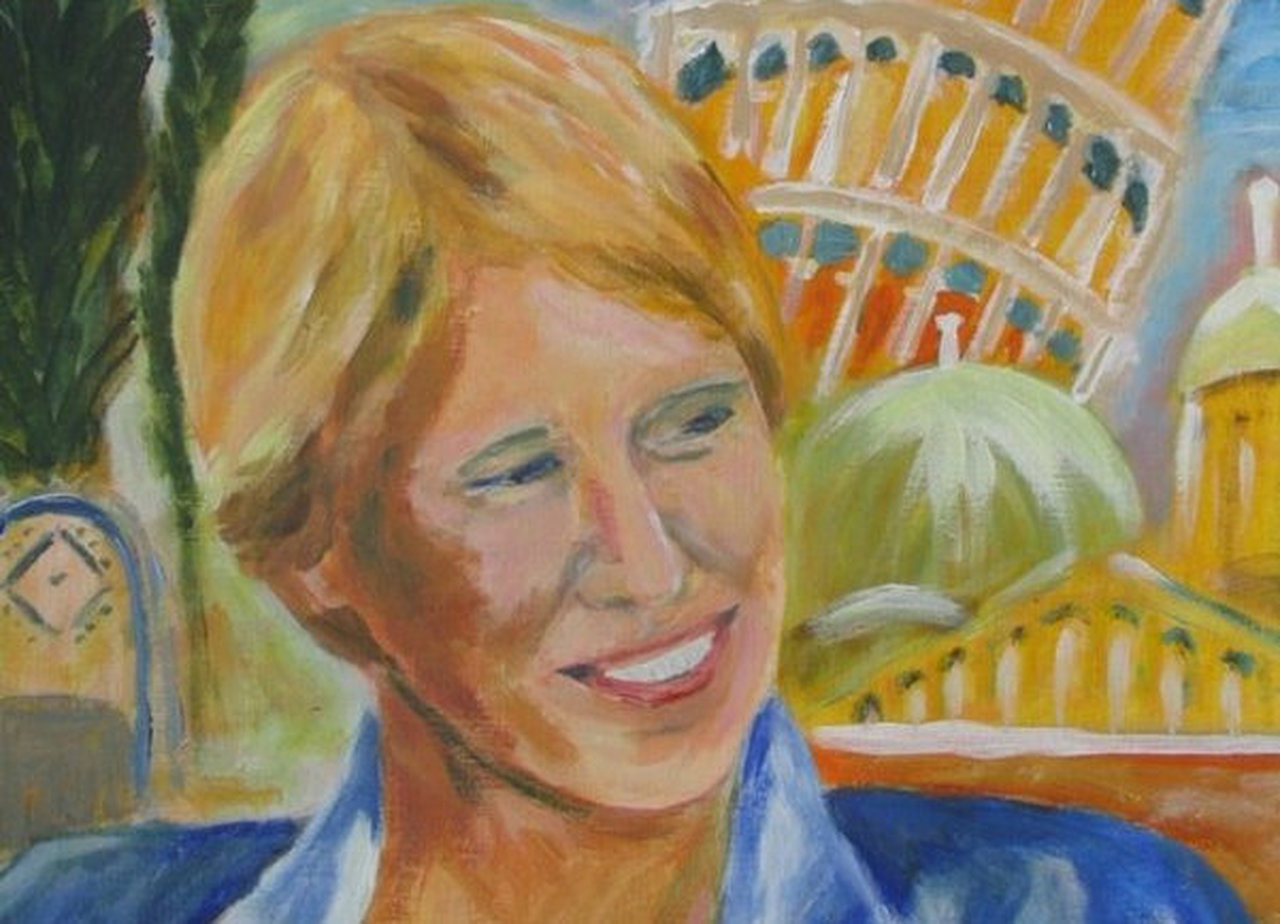

Model Checking Goes Spatial with Mieke Massink

by Martin Tappler — 23 January 2026

Topics: Interview

Mieke Massink is a Senior Researcher in Computer Science at the Formal Methods and Tools lab at CNR-ISTI (Institute of Information Science and Technology “A. Faedo”), a computer science research institute of the National Research Council of Italy (CNR). Prior to this appointment, she held several research positions at the University of Nijmegen, The University of Twente, both in the Netherlands, and, in the context of several European projects, at the CNR-CNUCE and CNR-ISTI institutes, Italy, and the University of York, United Kingdom. She is an invited tutorial speaker at ETAPS 2026.

How did you get interested in formal methods?

I have always been interested in methods that can help to develop correct software. Software is a strange kind of artefact. It may show non-linear behaviour, somewhat similar to that found in complex systems, in the sense that small mistakes in the design or in the code may have huge effects on its final behaviour, including effects on the safety of people or damage to things. I think the first time I became fully aware of that was when I came across an article by Bev Littlewood and Lorenzo Strigini on the Risks of Software that appeared in the Scientific American way back in 1992 [1].

Most errors are introduced during the design phase, and the later they are discovered, the more it costs to repair them. In the early nineties, when I started my PhD work, there turned out to be very few reliable methods to analyse software designs, especially for distributed and concurrent systems, but there was a lot of interesting research going on in that area, in particular in theorem proving and model checking for system design. For obvious reasons, that research focused on capturing the behavioural aspects of software models in a rigorous and unambiguous way, through the development of process calculi and process algebras to specify concurrent behaviour, and modal logics to express behavioural properties of interest. Time was modelled in many ways, ranging from discrete time to continuous time, hybrid forms of both, and stochastic time—you name it.

Your tutorial is about spatial model checking. Why is that?

It was not until we got interested in modelling Collective Adaptive Systems, some fifteen years ago, that we realised that also physical and logical space, besides time, could also be important dimensions to consider in models of software, in particular, in complex systems that consist not only of software components but also of the environment and humans. One interesting case study was that of bike sharing systems, in which people park their bikes in docking stations that are situated in various locations in a city, for example, near schools, shops, train stations, bus stops, and residential areas. The physical location had a clear impact on the attractiveness of those stations for hiring and returning bikes over the course of the day, and on options to improve the usability of those systems for users and reduce the number of kilometres vans have to travel to redistribute bikes.

This made us aware of the fact that in some systems, space could be as important as time, which is something that had been known for a long time in, for example, classical AI, where several spatial logics exist, perhaps the most well-known being the Region Connection Calculus (RCC) by Randell, Cui, and Cohn of 1992 [2]. Although RCC was used for reasoning about space, it had never been used in a model-checking setting. There was also a lot of theoretical work on spatial logics. Together with Diego Latella and Vincenzo Ciancia, as a small group of curious pioneers in the Formal Methods and Tools group in Pisa, and with Michele Loreti, we found that even Tarski and McKinsey, in the early forties of the previous century(!), had already given a spatial interpretation of modal logics based on topology. The Handbook of Spatial Logics by Aiello, Pratt-Hartmann, and van Benthem [3]—although not addressing spatial model checking—turned out to be a great source of inspiration for the development of the spatial model checker VoxLogicA and the spatio-temporal model checker topochecker.

For those of us who are not familiar with spatial logic, can you provide a simple example of a typical property that you can verify with spatial model checking?

Sure! Suppose you have an image of a maze, with walls in black, footpaths in white, and an exit in green. You might like to find the areas from which an exit can be reached, as well as those from which no exit can be reached. Another example could be an OpenStreetMap view with a station somewhere, and you would like to find all the larger streets surrounding the station. In a medical setting, you might like to contour lesions of the skin, such as a particular kind of nevi, regardless of the presence of other elements such as hair. In the spatio-temporal setting, such as that of bike-sharing systems, one could be interested in finding “clusters” of docking stations in a particular area that all become full and remain full over a certain period of time, making that area a critical one for those who need to park their bike and have to go to an appointment or catch a train.

How have you used these spatial model checkers so far?

As often happens in research, we started by investigating various case studies, mainly in the area of public transportation, when we happened, by chance, to end up speaking with people from the medical domain who were involved in analysing magnetic resonance images. Since model checking approaches are very general in nature, we teamed up with them to investigate applications in that domain as well, which turned out to be surprisingly successful. In this application domain, the logic mainly plays the role of a domain-specific spatial query language to find areas of, often critical, interest from a medical point of view. We have also started to investigate how a spatial model-checking approach could be used in tandem with sub-symbolic approaches based on deep learning. This would provide a protective-redundant way in which results are obtained in two completely different ways. If the results are sufficiently similar, then the result is likely to be more reliable, as the two methods are very different and unlikely to make the same mistakes on the same image. The longer-term aim is to obtain more transparent, reliable, and explainable forms of image analysis that could be compatible with medical guidelines for these forms of image analysis.

What are your next steps in this endeavour?

Well, there are so many application areas that it is difficult to say where we will be looking next. Certainly, we plan to continue its application in the medical field, as we feel that it can be really useful there. But there are many other fields where pixel-based images are used and where variants of SLCS could be used. By the way, SLCS stands for Spatial Logic for Closure Spaces, which are an extension of topological spaces. Let me just mention a recent next step that concerns 3D polyhedral images. These are more commonly encountered as triangular or tetrahedral meshes in computer graphics. Together with Gianluca Grilletti and our Georgian colleagues and experts on logics, Nick Bezhanishvili and David Gabelaia, we have developed a polyhedral semantics for SLCS and the related polyhedral model checker PolyLogicA.

For readers who may not be able to attend your tutorial, are there any papers or other resources you would recommend as an introduction to spatial model checking?

With pleasure! There are actually many, but let me mention two of the more recent ones. The first is an article introducing SLCS and the related spatial model checker for pixel- and voxel-based images, VoxLogicA. It also presents applications in the medical domain, in particular, the contouring of brain tumours and identifying white and grey matter [5]. A hands-on tutorial for VoxLogica can be found in [6]. The second article is concerned with polyhedral model checking and the PolyLogicA model checker that I just mentioned [7].

I have seen in your short bio that you are also concerned about the social responsibility of scientists. Is this a recent interest?

No, since I was a student in the eighties, I have always been interested in the social responsibility of researchers, and I decided to dedicate part of my research time to that topic. Research does not take place in a vacuum, nor is it neutral. It is actually often socially constructed, as already observed by Wiebe Bijker [4]. By this, I do not mean to say that science is not objective, but the directions in which research and technology develop are strongly influenced by sometimes complicated relationships between stakeholders in society.

From my student years onward, I have been involved in teaching courses on the social responsibility of researchers, first with Marc van Lieshout at the University of Nijmegen in the Netherlands, and later with Diego Latella, with the support of Dino Pedreschi, at the University of Pisa in Italy. Some of the main topics at that time were issues of privacy and the Strategic Defence Initiative, also known as Star Wars. This system was meant to protect the USA from attacks by nuclear missiles and was heavily based on safety-critical software.

Unfortunately, although since the early nineties the nuclear arms-race was reversed and the nuclear weapon stockpiles in the USA and the Soviet Union were reduced from roughly 30,000 each to about 4,000 each in 2025, these weapons have not been abolished completely and continue to be a serious threat to humanity and the world, in part due to the safety-critical software that is running the command and control systems [8], which is currently being modernised in many nuclear states. The Russell-Einstein Manifesto of July 1955 [9] remains a very relevant and inspiring document, reminding us of our responsibilities, and is worth reading by everyone, including computer scientists.

Computer science, in particular, has had tremendous societal impact, for example, through social media and more recently through AI. While initiatives like the International Scientific Report on the Safety of Advanced AI have been established, large tech corporations remain the most influential actors. In this context, what role can—and should—computer science researchers, especially those from formal methods, play in shaping the responsible development and deployment of technology?

Well, the challenges and possible consequences of a naive use of new AI products such as Large Language Models, Generative AI, and Actionable AI are huge. Your question is a very important one, which, unfortunately, has no simple answer since these products are so pervasive, in continuous evolution, and heavily funded by private actors with their own interests. Moreover, they have been released to society without any preparation, regulation, discussion, or warranty. In some sense, we are in the middle of an unexpected social experiment of enormous scale that is taking huge amounts of energy, financial resources, and human labour for further training of such systems. But let me try to focus on two of the many, many issues.

The first is the concern that it gets increasingly difficult and time-consuming for people to distinguish between fact and fiction. As Hannah Arendt already argued, such a situation may leave people susceptible to manipulation and less able to think for themselves, which in turn could facilitate the emergence of totalitarian regimes, as we have seen in the past. Even if not easy, a first step to help people to, at least, distinguish between products such as texts, music, and images generated by (sophisticated) machines and those produced by humans like themselves could be to make clear labelling obligatory, much like we require for advertising.

The second concern is more psychological in nature: our language, historically, was spoken and written only by our fellow humans and not by machines. Even people who are fully aware that LLMs are machines are shocked by how human-like they interact with us. People less aware may be even more susceptible to such an experience and assign it an unjustified feeling of empathy and trust purely based on the language LLMs use, lowering critical reflection even further. Here, I think, a first responsible step of researchers is active education, by raising public awareness, showing examples of such effects, and demanding much more responsibility from the main actors, including through the development of regulations concerning safety standards and certification. After all, the only ones who can be responsible for what happens in our society are we, humans, not machines.

[1] Bev Littlewood, Lorenzo Strigini, “The risks of Software”, Scientific American, Vol.267, no. 5, 1992, https://www.scientificamerican.com/article/the-risks-of-software/

[2] Randell, D.A.; Cui, Z; Cohn, A.G. (1992). “A spatial logic based on regions and connection”. 3rd Int. Conf. on Knowledge Representation and Reasoning. Morgan Kaufmann. pp. 165–176.

[3] Aiello, Pratt-Hartmann, and van Benthem, “Handbook of Spatial Logics”, 2007.

[4] Wiebe E. Bijker, Of Bicycles, Bakelites, and Bulbs: “Toward a Theory of Sociotechnical Change”, MIT Press, 1997.

[5] Gina Belmonte, Vincenzo Ciancia, and Mieke Massink. “Symbolic and Hybrid AI for Brain Tissue Segmentation Using Spatial Model Checking”. Artificial Intelligence in Medicine, 167, 2025. https://doi.org/10.1016/j.artmed.2025.103154

[6] Vincenzo Ciancia, Gina Belmonte, Diego Latella, and Mieke Massink. “A Hands-On Introduction to Spatial Model Checking using VoxLogica — Invited Contribution”, SPIN, 2021. https://doi.org/10.1007/978-3-030-84629-9_2

[7] Nick Bezhanishvili, Vincenzo Ciancia, David Gabelaia, Gianluca Grilletti, Diego Latella, and Mieke Massink. “Geometric Model Checking of Continuous Space”, Log. Methods in Computer Science, 18(4), 2022. https://doi.org/10.48550/arXiv.2105.06194

[8] Eric Schlosser, Command and Control, Penguin Press, 2013.

[9] Russell-Einstein Manifesto, July 1955, https://pugwash.org/1955/07/09/statement-manifesto/