A Lifetime of Algorithms

by Eduard Kamburjan — 28 January 2025

Topics: Interview

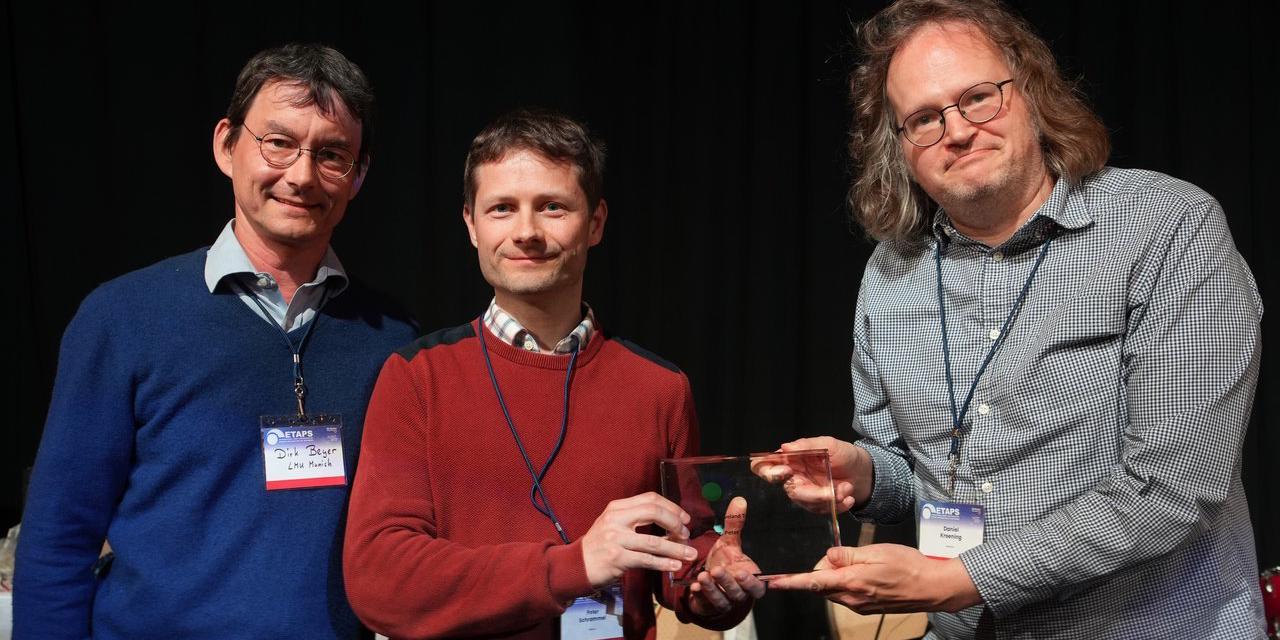

This interview is conducted on the occasion of awarding the ETAPS Lifetime Award to Prof. Dr. Bernhard Steffen.

Congratulations on your award. What are the things that you are most proud of?

As a co-founder of ETAPS, TACAS, STTT, and ISoLA, I am immensely proud of their success.

On the scientific side, reflecting on my research journey reveals some, in retrospect, interesting developments. After completing my PhD in program analysis, I joined the Concurrency Workbench project in Edinburgh, where model checking was a major focus. This led me to establish the connection between model checking and data flow analysis in the early 1990s. Initially intended to automate the generation of data flow analysis algorithms from temporal logic specifications, this connection revealed a deeper insight: In contrast to the traditional algorithmic specification in terms of how a certain dataflow properties can be computed, they simply specified the what, i.e., properties the dataflow analysis was meant to reveal.

These concise and compositional specifications enabled the incremental definition of complex properties using logical operators. Our optimal code motion algorithm not only solved a long-standing open problem but could also be elegantly verified through its logical structure.

Today, I see temporal logic as an ideal domain-specific language for this task. Our 1992 second-order model checking algorithm for context-free systems even supports inter-procedural analyses, forming an elegant program analysis framework implemented in our fixpoint analysis machine.

In practice, formal methods-based solutions often face limitations. During a large industrial project with Siemens Nixdorf on telecommunication systems, regression testing was a major concern. We employed active automata learning to create a new model-based testing approach, where models were automatically inferred during testing. This successfully reduced the regression test suite by a factor of five while improving test coverage.

Automata learning is now popular for inferring models for formal methods and can be combined with monitoring techniques for advanced runtime verification. Our LearnLib project, which won the CAV artifact award in 2025, is the leading framework for learning automata. It supports regular systems, register automata (RAs), extended state machines (ESMs) for data, and systems of procedural automata (SPAs) for context-free modeling structures.

Active automata learning is unique among formal methods-based techniques because it is neither correct nor complete. It provides the most concise hypothesis that explains a given set of observations, adhering to the principle of Occam’s razor. This distinguishes it from today’s popular AI-based methods, which typically lack such a foundational framework.

Is this still a field you are active in?

Yes, I continue to believe that active automata learning has the potential to revolutionize testing, though it takes longer than anticipated. Technological advancements are needed, such as richer model types than finite automata, monitoring-based learning, and handling long counterexamples. Practically, we must lower entry barriers by simplifying the definition of learning alphabets and modularizing testing efforts. Our “learnability by design” approach aims to address these challenges.

Is there something that you wish you would have done, but did not have the time or opportunity to do so?

I’ve long envisioned a revival of the Electronic Tool Integration platform (ETI), which was designed to combine and use the best features of the best formal methods tools. In the mid-90s, we had a corresponding fully service-oriented solution, a decade ahead of its time. We hoped that tool papers would include ETI implementations, but it was too labor-intensive back then. We integrated about 15 tools, but a quarter of a century ago, scaling this approach was unrealistic. Later, we attempted remote tool integration, which scaled better, but tool providers were reluctant due to primitive web interfaces lacking graphical support.

Today, we have advanced tool environments and language workbenches that enable collaborative graphical modeling: The time is ripe to renew efforts toward the original ETI goal. We are currently enhancing the Open-Source LearnLib as a prototype in this direction.

For people who are starting now, like PhD students or people who want to start a PhD, what advice would you give them?

We live in challenging times, with AI developments exposing the limits of our bounded rationality, not only in games like chess and Go but also in argumentation and even science. Understanding and managing these advancements is crucial. I believe that solid foundational knowledge, as provided by formal methods, is essential in this endeavor. Therefore, I strongly recommend exploring the potential of AI from the formal-methods perspective. This approach is intriguing for several reasons, even though we may never fully verify modern AI systems. We can aim for:

- Partial validation techniques,

- Analyzing the capabilities of AI systems, such as their ability to reason formally,

- Establishing new forms of AI/human interaction, similar to the increasingly popular pair programming with AIs, and even

- Gaining new insights into how our own brains might function.

We are just at the dawn of a new era, and it is exciting to be a part of it. This was the reason for me to found AISoLA in 2023.

Is there a paper or a book that you would recommend people to read?

“The Principles of Model Checking” by Christel Baier and Joost-Peter Katoen is an excellent resource. Additionally, staying informed about online material becomes more and more important. Finally, LNCS 15000 (LET’s Talk AI), which will appear soon, is an interesting collection of interviews concerning the future impact of AI. The corresponding project was initiated at AISoLA 2023, and it evolved to even provide quite remarkable interviews with two AIs, ChatGPT and Pi.