Competitions of Research Tools: Just for the Fun or Scientific Method?

by Marieke Huisman — 23 December 2024

Topics: Interview

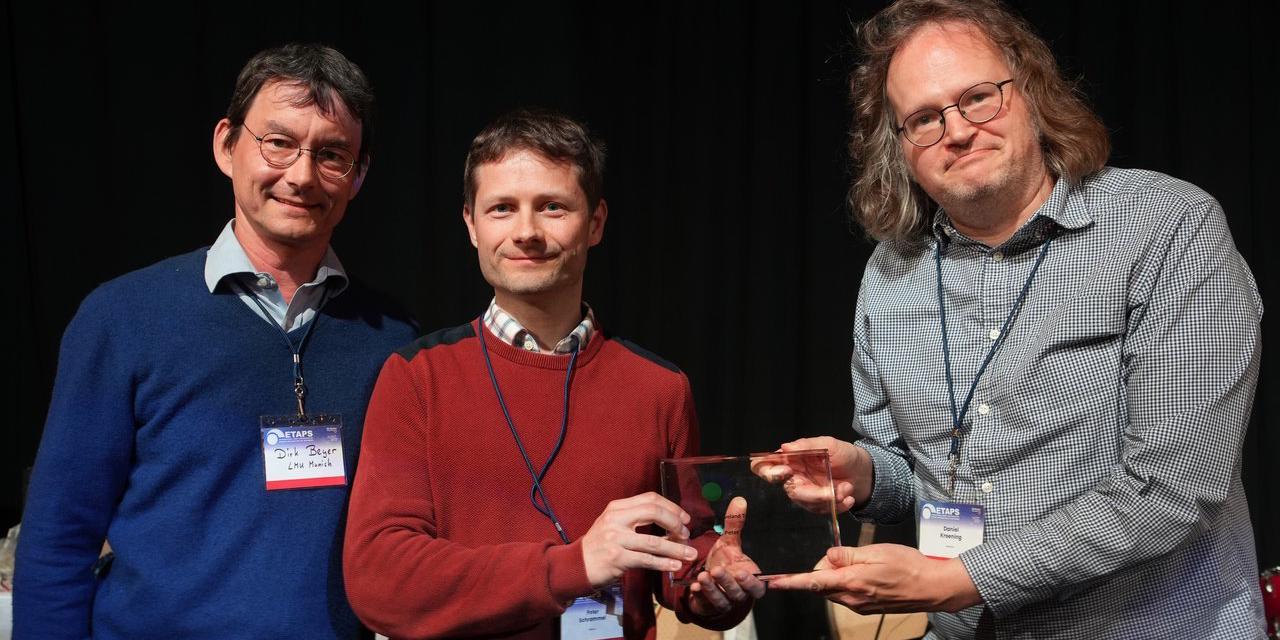

This blog post covers the topic of competitions, facilitating an interview of Marieke Huisman with the three organizers of competitions, Dirk Beyer, Arnd Hartmanns, and Fabrice Kordon. They give some insights on why they organize competitions and why they are important. They are editors of the recently published book on TOOLympics 2023.

Can you explain why competitions are important for our research community?

We use the name competitions to refer to regular comparative evaluations. Most competitions have defined a scoring schema, which translates quality of results to points, and then rank the participants, leading to a winner of the competition, which justifies the name competition. So there is a winner, a “best” participant. Although it is nice to win a competition, this is not the main point. Most teams participate to receive an objective evaluation of their tools, a comparison with the state of the art, to have their tool showcased, to practice reproducibility, to increase their visibility and recognition, to benefit from the publication opportunities, and to justify funding when applying for research grants. So there are lots of reasons to participate.

Comparative evaluation is an important scientific method to objectively evaluate research results and their applicability to solve concrete problems. Without the competitions, we would have only the comparisons that authors report in papers. Those evaluations by authors are often biased, namely, when authors decide how to use a tool (for example, setting their options) or which benchmark set of input problems to select. In competitions, there is a jury or organization team that sets up rules, the benchmark problems to work on, and the participants (often the developers) decide how to use the tool (for example, which options to set for an automatic tool, or how to interact with a theorem prover).

What is evaluated in the competition?

There are different flavors of competitions: Some competitions compare automatic tools in specific environments (for example, SAT-COMP, SMT-COMP, SV-COMP, Test-Comp, Model Checking Contest run experiments all on similar machines, with strict resource control), some others allow arbitrary machines and resources (e.g., RERS), and some compare tools and user teams together (e.g., VerifyThis) in order to evaluate also the effectiveness of the interaction between users and the tools.

In some competitions (e.g., Model Checking Contest), a comparison is done with the winner of the previous year, thus allowing organizers to show the progress over the years (for example, by comparing the “best” new tool with the best from the last year).

Do you see other benefits for such competitions?

Despite the fact that there is a common way to evaluate tools, competitions also collect and maintain benchmark sets that can be reused by a research community when doing individual experiments. When it comes to software competitions, it is also a way to define test suites, especially when the outcome of problems is known and validated by the competition. For example, SV-Benchmarks, the largest and most diverse benchmark set for verification tasks in the language C, is maintained by the community around the Competition on Software Verification (SV-COMP). It contains more than 30000 verification tasks.

Tool developers usually report that competitions have a huge impact on the code quality. Competitions are a learning opportunity: Participants are exposed to cutting-edge verification tools and techniques, and the participation in a competitive and prestigious event can inspire and motivate further contributions to the field.

Finally, competitions strengthen and federate the targeted communities, including non-participant colleagues. Results of the competitions are presented at international conferences and workshops, and these events bring together researchers to interact, collaborate, and share best practices. The competitions also encourage the development of standards, such as common input/output formats.

What are the differences between the various competitions?

First, every competition targets different situations and thus, uses different inputs, addressing their respective needs in the targeted application domain.

For example, in the Model Checking Contest, the input is a concurrent system model written as a Petri Net using the ISO/IEC exchange standard that was defined by this community. Numerous generated formulas to be evaluated are proposed using the “de facto standard” that is understood by several tools.

In other competitions, the inputs are software programs (SV-COMP), stochastic models (QCOMP), or specifications of computational problems that the participants have to solve (VerifyThis).

The tools are delivered as archives of executable software (SV-COMP, QCOMP), as virtual machines (Model Checking Contest), or are pre-installed on the laptops of participants.

For some competitions, computation is performed before the event where there are presented (like for SAT-COMP, SMT-COMP, SV-COMP, Model Checking Contest). In some others, the participants are expected to work on site to solve problems (like in RERS or VerifyThis).

Finally, what are the goals of TOOLympics?

TOOLympics is a collection of competitions, which takes place every four to five years. The first TOOLympics took place in Vienna, as part of FLoC 2014, the second in Prague as part of TACAS 2019 at its 25th anniversary. The most recent edition took place in Paris as part of ETAPS 2023. We plan to organize the next TOOLympics in 2027, again as part of ETAPS.

The goal is to let competitions organizers exchange about their practices, their problems, and associated solutions. It is also a forum where a multi-year vision of such competitions can be discussed. It is also a place where participants of the different competitions can meet and discuss together.

If you want to read more, we would like to invite you to the following reading list, which covers some of the events mentioned in the text:

- TOOLympics 2023: https://doi.org/10.1007/978-3-031-67695-6

- TOOLympics 2019: https://doi.org/10.1007/978-3-030-17502-3

- MCC 2018-2023: https://doi.org/10.1007/978-3-031-67695-6_3

- RERS 2021: https://doi.org/10.1007/s10009-021-00617-z

- SV-COMP 2024: https://doi.org/10.1007/978-3-031-57256-2_15

- Test-Comp 2023: https://doi.org/10.1007/978-3-031-30826-0_17

- VerifyThis 2023: https://doi.org/10.1007/978-3-031-67695-6_5