Courage in Research

by Georgiana Caltais — 1 August 2024

Topics: Interview, Award, Test-of-Time Award

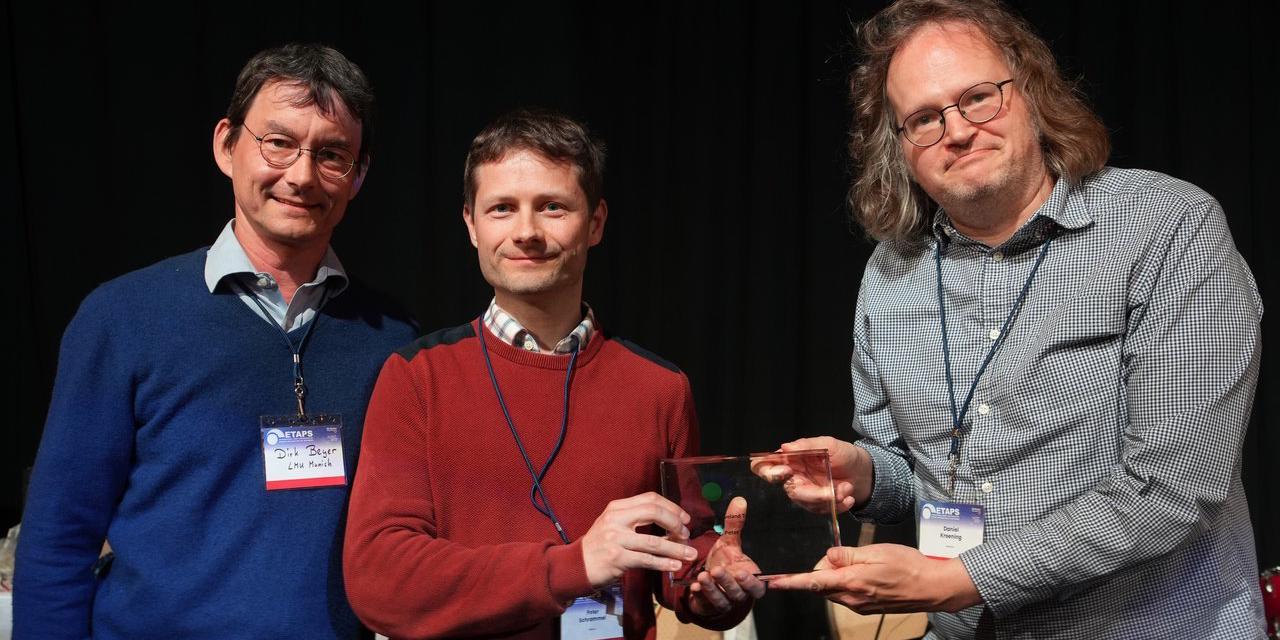

Luca Cardelli and Andrew D. Gordon have won the ETAPS 2024 Test-of-Time Award for their work on Mobile Ambients, published at FoSSaCS 1998. In the paper, they introduced a novel, elegant and highly expressive formalism, called the ambient calculus, which provided a unifying framework for modelling a full spectrum of systems with mobility, covering both mobile computing (computation carried out in mobile devices in networks with a dynamic topology) and mobile computation (executable code that is capable of moving around the network). What is their view on research nowadays?

Could you tell us a bit about yourselves, your research field, and current work?

Luca: My research has changed over the years. I started with trying to reduce a problem in programming languages or in systems to lambda calculus (we now call it lambda reductionism), then finding a solution there, and then bringing the solution back into the application domain. And that was working well for a long while. But, afterwards, we started running into trouble, especially because of concurrency. At that point Robin Milner and Tony Hoare showed us that you don’t have to be a lambda reductionist; you can invent another foundational calculus and do the same kind of activity in that calculus. That was the beginning of my concurrency phase. Now we had this foundation that was different from lambda calculus, and we had process algebras. Then I worked with Martín Abadi on object-oriented languages, trying to reduce them to lambda calculus. That was hard especially because of the type theory. But now we had this process algebra, and we decided to invent a new calculus, different from lambda calculus. So, we invented this object calculus, and we had to change our foundation to something suitable. Then, later, with Andy, this led to inventing an algebra for mobility. So, that was the story of my transition, in some sense.

Now my foundational calculus is chemical reactions. Chemistry was not originally computational science. It was phenomenology: look at nature, see how reactions work in nature and write it down. But there was no engineering activity. Now we can use chemistry as a computational framework. And this is what I like to do now. I work mostly in chemical reaction networks and DNA computing. Some questions are: What kind of algorithms can you write in chemical reactions, and what kind of programs can you write chemically? What can you do with DNA, and how can you program it? For example, how can you compute the first time-derivative of a continuous signal using chemical reactions? This is a problem that is actually unsolvable, but you want to get closer to a solution.

Andy: The last six months I’ve been working at Cogna, a software synthesis company in London. We are trying to figure out how to help end users to solve their productivity problems and their business problems, by exploiting the latest AI—the large language models. I actually think this is the state of the art of programming language research. Over the next five years there’s going to be quite a shift. I believe people will be writing less and less code, and professional engineers will spend a lot more time reviewing code generated by large language models: either completely fresh code, or changes such as bug fixes to existing code. That’s a general trend in the industry, brought on by code generating models like ChatGPT or Gemini.

There’s a whole range of companies that produce these foundation models, and the kind of research that we’re doing at Cogna, and the product we’re building, is really aimed at end users, not professional engineers. We want to help end users describe in words or by diagrams, for instance, what their current business problems are. Then, we’ll co-design with them—using AI—hyper-customised pieces of software that will solve those problems. I think this is a great growth opportunity for programming languages. We’re really going to enable end users who don’t think about themselves as programmers, to actually program using words and diagrams. Behind the scenes, we’ll generate the code that will solve those problems.

Do you believe that the current trend of large language models will stand the test of time?

Andy: It’s so hard to predict where it will be in 25 years’ time. I don’t think back in ‘98 we felt like 25 years later we would get this accolade, and it’s fantastic. I think in five years people will look back and be amazed at the progress that’s been made in being able to generate code. I think there’ll be a big shift as I say towards people spending a lot more time comprehending and approving generations from language models than they’ll spend writing fresh code. And many more people will be able to participate in this.

Luca: The fact that you can take a set of features as a vector, embed it into a vector space, in a very high dimensional vector space define the distance between the vectors, and then something completely magical happens: this distance conveys meaning—that’s mind-blowing. And I think we still don’t understand how that works. We need to make more progress on understanding why that works so well. I don’t think the AI people know a lot about it. We have one architecture now which is the “transformer” and that works extremely well. The question is what are the other architectures that can help us move forward? Presumably the brain uses different algorithms, which we don’t know about yet. There will be progress in that direction, which will probably involve more revolutions.

The recent progress of large language models is amazing, and at times it can feel scary. If large language models become so clever that they can answer any question, then many research fields and questions may become obsolete. What is your feeling about this?

Andy: I think many people’s work, including machine learning specialists, has been disrupted by what these large language models can do, over the past few years. Some people in natural language processing, for instance, found that all the methods that they worked on were made obsolete. I think a lot of people had a deep crisis, but I think they’ve built on their knowledge of the problem space. And if you understand a particular problem space, that’s deeply worthwhile to a researcher. Even if your own methods suddenly are made obsolete, you still know the problem. So, I think these researchers then started asking, well, alright, let’s look in a more nuanced way at how well large language models actually operate. And so they get inspired about new directions to follow.

I think we should all take inspiration from that and be courageous about what research we can do. It would be a mistake to ignore AI and to think it doesn’t apply. Take formal methods. There have been really exciting works on using AI to generate proofs. I see that in the Lean community, or Isabelle. My PhD supervisor was Larry Paulson, the inventor of Isabelle, and his research group has started to use large language models to generate proofs in Isabelle. I think Larry has done some legendary stuff in terms of bringing automation into interactive theorem proving, making it easier to generate proofs. I think that some of the work in his group on using large language models also to generate proofs kind of takes that a step further. Similar things are happening in the Lean world as well, which I think is just very exciting.

Going back to your work that stood the test of time, could you tell us about the story behind this line of research, and the actual contribution?

Luca: What we wanted to capture when we started, was the notion of mobile computing—both in the sense of active software moving across devices while running, and also in the sense of active hardware moving. Examples are cell phones moving across boundaries like rooms and airports. We wanted a unified framework to talk about all those things that were happening together. At that time there were Java applets that just came out, and those are some kind of mobile software. There were cell phones, they were starting to become very popular. There were lots of security issues. Company firewalls were being broken into. At the Digital Equipment Corporation I implemented a language which had code moving across computers in our local area network. We wanted to generalise that to wide area networks. So all that was in the background, I don’t remember much about how we started collaborating. Maybe Andy remembers more.

Andy: I remember quite well. As context, let me say a bit about my background, about what led me to all this. Robin Milner was my first ever lecturer at university up in Edinburgh. He taught his theory that Luca mentioned, called Calculus of Communicating Systems (CCS). That was one of my final year courses, which I loved very much. I also loved ML (Meta Language), the language that he was one of the leaders in. And so I decided to do a PhD in functional programming and decided to come to Cambridge to work with people here. And my PhD was about trying to bridge between ML and CCS. I was wanting to have a PhD called “Functional Programming and Concurrency” where I was going to show how to develop a theory of concurrency within a functional programming language. But that turned out to be too ambitious, and so it ended up simply being “Functional Programming and Input/Output”. Even that was like a big step forward back then: to figure out a theory of functional programming that could also accommodate input/output. At the time Haskell was being developed, and the Haskell community was trying to figure out how to do input/output in the setting of a pure functional language. The input/output effects are not naturally part of functional programming. There are a number of ways to encode it, and I worked on understanding that.

I got very interested as a postdoc in making use of the subsequent theory of the pi-calculus that Robin and others came up with. I had done my PhD in an office that had systems researchers who were very interested in cryptographic protocols, and I used to chat to them. We used to argue, as PhD students do, about how to theorise about cryptographic protocols. During my PhD, I never figured out how to do that, but then I started working with Martín Abadi, and we had this idea of using Robin’s pi calculus or aspects of it to model security. The restriction operator in the pi calculus is a lovely way to represent a secret that is freshly generated. And we realised that we could represent things like secret keys and nonces—fresh random numbers used by cryptographic protocols—using restriction. That led us to invent a new formalism called the spi calculus, which was a variant of the pi calculus oriented towards security.

So in the summer of ‘96, I was working with Martín on spi in California, and I had this holiday in Mexico, then came back to the US to upstate New York. There was this venue, called Lake Mohonk Mountain House. Luca and I were at this meeting—it was a functional programming workshop called IFIP Working Group 2.8. Luca had been thinking about a security model based on passports where you do gatekeeping, when you enter a country where you show a passport, a sort of capability to get in. But once you’re inside the country, you’re free to move around. So he was thinking about how to formalise that kind of security model in something like the pi calculus. And I’d been doing something similar for cryptographic protocols, so we had a conversation. I started scribbling on a bit of paper, and you know, the collaboration started. It was a ton of fun. I lived in Cambridge, and Luca was living in Palo Alto, CA. So I remember we had a ton of emails, but I don’t know where they are now. They’ve vanished, probably. But we had a ton of emails developing the theory of this calculus. It was a very remote collaboration for quite a long time, before we finished the paper.

Are there any subsequent developments or extensions that you find particularly interesting that have emerged from your work on mobile ambients over the last 30 years?

Luca: I was contacted by a biologist, who was a student of a colleague. The name is Aviv Regev—she’s currently the head of Genentech Research. They basically wanted to use the ambient calculus in a biological context. So we worked on these things that became bio-ambients. It was part of her thesis. That’s an ambient calculus framework with a stochastic semantics and a chemical simulation algorithm. That was totally unexpected. They contacted me, and that’s what got me into trying to learn biology because I had to talk to these biologists. And they’ve basically changed my career path at that point because I got interested in biology and I started working more in that direction. So that was my main event after ambient calculus. Afterwards I started working on what I called the brane calculi. These are like ambient calculi, but instead of having the operators inside the environment, the operators are on the boundary. And that’s more like cells: they have proteins on the membranes. That, in turn, got me into touch with other people. There’s a whole long tradition of people working on membrane computing. I got in touch with them because we now had this kind of competing approaches. We got together, and we started collaborating a little bit, and then converged on this kind of ambient-like formalism. Then I got deeper and deeper into DNA computing and chemistry. Microsoft developed a big set of tools on biochemical simulation under Andrew Phillips, with whom we initially tried to implement stochastic ambient calculus, but we never made it. Andrew is now at Sanofi working on biological modelling.

Andy: In terms of impact, there was an amazing range of lines of research. People were directly following up the original paper. We had a series of papers about mobile ambients, the basic untyped calculus. And then we explored a range of type systems where I personally learned a lot about how to develop type systems and logic as well. And equational reasoning building on some of the theory of behavioural equivalences from the pi calculus. There were lots of other colleagues who maybe knew some of these topics even better than us, who followed up. It was really gratifying, just to see the kind of results that people could get about the ambient calculus. And they also looked at various variations of the calculus. We had this open operator that was criticised as being a bit reckless because it would open up an ambient and its contents would spill out into the original enclosing ambient. In some sense, you had no idea what was going to spill out. So, you had to be careful about how to program with that operator. But others figured out ways or alternatives to that, which was very interesting.

In terms of the impact it had on me—I didn’t go the route of biology. I think Luca has been reading Scientific American since he was a teenager and knew a lot about biology, certainly way more than I did. So, I didn’t feel able to go in that direction. But it definitely influenced some of the following research I did in the security area. With a colleague, Cédric Fournet, we had a paper about what’s called stack inspection mechanisms in runtimes like Java or .NET. We developed a theory of compartments there to model what was going on, which was influenced by the ambient calculus. It was a lot of fun to work on that paper.