Bringing Formal Methods to the "Average Programmer"

by Benjamin Kaminski — 5 April 2024

Topics: Interview

Could you introduce yourself and your research area?

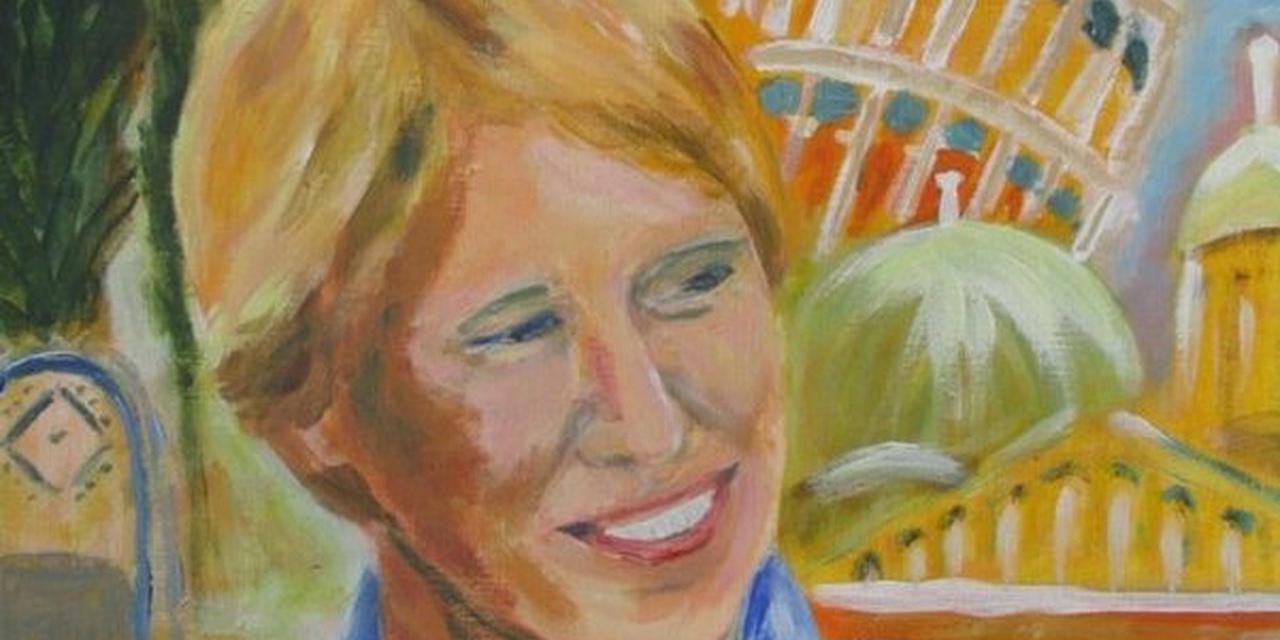

I’m a senior research at CNRS, which means I’m like a tenured professor that does not need to teach (but I do teach). I’m currently director of Verimag, a research center in formal methods, verification and related topics, jointly operated by CNRS and the University of Grenoble. I work in formal methods in the broad sense. I have worked on abstract interpretation and was one of the developers of the Astrée static analyzer. I have also worked, among other topics, on SMT-solving, and the analysis of cache properties. These days, I work mostly on formally verified compilation with optimization and security features.

What are the most exciting current advances in your research field?

I am intrigued by the applications of machine learning to program synthesis and verification. Obviously, machine learning by itself is not reliable. However, combinations of machine learning and strict checking, such as provided by a proof assistant or program verification framework, are reliable. Some interesting progress has been made on having a system learn how to do formal proofs in a certain field, but we are only at the beginning. Challenges are hard; for instance, machine learning thrives on large training sets, but we don’t have immense training sets of successful proofs.

What do you expect will be the next big thing/challenge in this field?

Since the advent of formal methods, there have been proponents of their general use in programming, and their opponents, citing their slowness, lack of scalability, and high costs. Today, formal methods have made great inroads in certain safety-critical industries (avionics, nuclear, trains…), and semi-formal methods have been successfully deployed by major computing companies (Microsoft, Facebook…). The challenge is, as it has been for the past 50 years or so: how do we bring these methods to the “average programmer”?

Do you have any ideas / vision on what would be necessary to achieve this?

Pascal Cuoq, chief scientist at TrustInSoft, explained to me their strategy for marketing their abstract interpreter. Initially, they demonstrate it just as an advanced testing tool that is guaranteed to flag any undefined behavior, a kind of super Valgrind or sanitizer: the analyzer operates on concrete execution traces and can be integrated into conventional test systems. Then, they point out that it is possible, at least for some input variables, to propagate sets of values instead of single values, in a way such that the guarantee of absence of undefined behavior applies to all possible input vectors thus described. Handling sets of values means that it also becomes possible to make allocation functions both succeed and fail, and to include both eventualities in the guarantee. They thus gradually introduce users to the notion of semantically sound static analysis, in a way that allows them to adjust the level of abstract interpretation that they want. This approach is much more likely to be successful, for most prospective users, than one introducing them at first to abstract concepts or one that forces them, from the start, to redesign their development and testing flow.

Meta (formerly Facebook) distributes the Infer static analyzer, which has a very pragmatic design. The tool can be run incrementally, so that developers can check if their commits produce new warnings; incorrectness logic allows the tool to produce traces leading to errors. The focus here is to help developers identify bugs when they are about to add them to the code, rather than to try to prove software correct.

What advice would you give to someone just starting their career in research?

See as many exciting things as you can before getting drowned in bureaucracy.

Finally, who would you like to see interviewed on the ETAPS blog?

Christine Paulin? Talia Ringer?

And which paper would you recommend everyone to read?

Difficult to say. For theory this one, for practicioners this one.