Software as Research Infrastructure

by Reiner Hähnle — 25 April 2023

Topics: Software, Infrastructure, Hardware

The Cost of Research Infrastructure

The supercomputer at my institution, TU Darmstadt (Lichtenberg-Rechner), has cost 15 M€ so far and needs several people and hundreds of thousands of euros per year to run. On a smaller scale, an electron microscope might cost 1 M€ and requires a technician (usually not the scientists themselves) to use it effectively. There is no upper limit: the Large Hadron Collider cost over 10 B€ and has an annual running cost of several billion euros. The cost of the Webb space telescope is of the same order of magnitude.

Necessity and Sustainability of Research Infrastructure

Of course, not every piece of research infrastructure is as expensive as the examples mentioned above; there are modest Petri dishes as well. But this is beside the point, which is that nobody doubts the necessity of research infrastructure in the natural sciences, because it is physical. And this is independent of any degree of applicability: the mere drive to understand the universe we live in is enough to justify vast investments.

What is more: it is broadly understood that it is not enough to buy an electron microscope, to build a collider, to launch a space telescope: it is necessary to sustain these efforts by employing technicians and engineers to maintain these machines so that scientists may continue using them.

Finally, while scientists certainly played a major role in the design of the LHC or the Webb telescope, nobody would expect a biologist to repair an electron microscope, a physicist to lay cables in the LHC, or an astronomer to launch the rocket that carried the Webb telescope into space. This is the task of specialists, and rightly so: it is obvious that engaging scientists for such tasks would not only be a waste of time, but the result would also be far from optimal.

Research Software: A Different World

Not so in the strange world of research software, that is, software constructed as an aid to performing scientific experiments. In the digital realm, the bulk of research software is not built by professional programmers and software engineers, but by the very scientists who use it.

I am unaware of any grant scheme that has “software construction” as a substantial cost category (only minor expenditures for off-the-shelf packages), let alone “software maintenance”. If research software is required for a scientific project, it is assumed that it is created either in unaccounted time or as part of scientific work packages. I argue that neither option is effective or sustainable.

Research Software: It Doesn’t Scale

Most people outside Computer Science (CS) research (and many insiders, too) are not fully aware of what is at stake. Let me explain what I mean by way of a research project I have been involved with: since the late 1990s we have been developing and maintaining the deductive verification system KeY, a well-known formal verification tool for the Java programming language. For example, it was used to detect (and fix) serious bugs in the Java API. Over 20 PhD theses relied on KeY to perform experiments, more than 10 other research projects used KeY in some way, and it is regularly used in dozens of undergraduate and graduate courses all over the world. Quite a few papers were written about KeY, and many of the research ideas expressed in papers dealing with KeY have been taken up by others.

I use KeY as an example simply because I know it well, but similar (and even more impressive) accounts can be given for other long-lived research software projects, such as Coq, CPAChecker, CVC5, Isabelle, KIV, KLEE, PVS, Vampire, or Z3, to name just a few from my area that come to mind.

But systems like KeY exist against the odds! Here’s why:

Over the years, about 15 M€ of public funding went into the development of KeY, via state-funded positions of senior scientists and PhD students, via DFG-funded projects, EU projects, etc. This is, by the way, comparable to the Lichtenberg-Rechner mentioned in the intro. However, in contrast to the Lichtenberg-Rechner, until recently the amount of funding specifically earmarked for sustaining KeY as a research tool was:

0 €

Any effort for maintenance, documentation, improving usability had to be either hidden or performed in unpaid time by selfless contributors.

Obviously, this situation is not sustainable.

Software as a Research Infrastructure

Research software is for the Computer Scientist (and, increasingly, for other scientists, too) what a particle accelerator is for the Physicist and the space telescope for the Astronomer.

Inside CS research, and increasingly outside of it, software as a research tool is nearly ubiquitous: The majority of CS PhD theses nowadays contain experimental aspects and this means, in most cases, that it is mandatory to create software. Computer Scientists receive training in programming and software engineering, so to a limited extent, they are capable of producing the software tools they need. But as argued above, this does not hold for larger projects and it is generally not sustainable beyond the span of a project or PhD tenure. And outside CS the situation is much worse. The quality of software produced by natural scientists who are forced to build it is, well, I guess it is comparable to what I might achieve with an electron microscope.

Risking the Investment

The discussion so far can be roughly summarized as follows. On one hand …

- Research software accumulates considerable investment

- Research software often exists for a long time: the more successful it is, the longer

- It is in the public interest to safeguard long-term investment

- Research software is as important (and getting more so) for the scientific enterprise as physical infrastructure

At the same time, keeping research software available, and constructing it professionally in the first place, involves a considerable amount of non-scientific work:

- Requirements elicitation, architecture, coding, …

- Maintenance: bug fixes, outdated libraries, adaptation to new technology

- Documentation, provision of APIs

Scientists, even Computer Scientists, are not necessarily the best choice for building and maintaining software, even if they were actually paid for it.

Unfortunately, the current landscape of research funding, specifically in CS, is completely inadequate to deal with this situation, which leads me to the conclusion:

The investment in many software-centered science projects is in grave danger of being lost in the medium term.

A Glimmer of Hope

But I don’t want to end my very first ETAPS blog with a depressing message, so let me mention two examples raising hope that the perception of research software might (slowly) be changing:

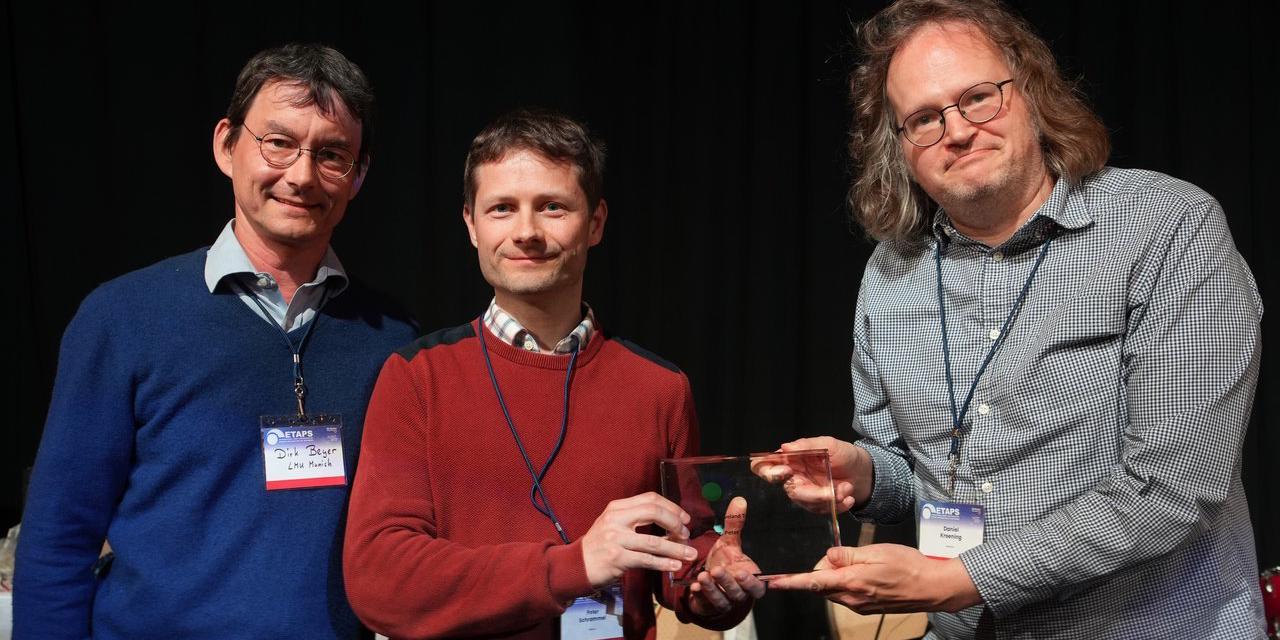

In 2021, the KeY project was among the first recipients of a DFG grant in the category “Quality assurance of research software through sustainable usability”, which addresses many of the issues discussed above. This grant was open again in 2022 and, hopefully, it will become a fixture. While this does not yet address the need to employ permanent, professional personnel (we obtained two PhD students limited to three years), it is a start. Hopefully, other agencies will follow.

My second example concerns the winner of the 2021 ACM Turing Award, Jack Dongarra. The award was presented to him for developing and maintaining the most important linear algebra package in scientific computing for more than 40 years, all the time adapting it to the latest generation of hardware architecture. That the most important prize in CS was awarded for a long-term effort in software development means that the scientific value of such an activity is prominently recognized. Now funding agencies only need to follow.

A Plea for Help

Let me close with a plea for help: I know many ETAPS members enjoy excellent scientific standing and recognition beyond their area of specialization. Many of you are in scientific advisory boards of funding agencies, universities, and professional societies.

If you have the opportunity, and it is appropriate, please bring up the challenge of creating software as a sustainable research infrastructure, the current lack of means to do so, and the potentially lost opportunities and investment.

And finally, here is a grand challenge: when the public invests billions in physical research infrastructure, at least there are nice pictures, such as this or this. In CS we completely lack meaningful visual metaphors for software. For example, if you type “software” into Google’s image search, what you get is downright depressing: various keyboards and screens with unreadable code on them, UML diagrams, mindmaps, … Is there really nothing better? Let me know!

Reiner Hähnle has been Full Professor of Computer Science since 2002, now at TU Darmstadt, until 2011 at Chalmers University in Gothenburg. His research interests are wide and include deductive verification, programming language semantics, software product lines, language-based security, active object languages, and philosophy of (computer) science. Reiner wrote his first lines of research software in 1986 (in Lisp, on a Siemens mainframe) and has been involved in numerous projects since. These days he is best known for his contributions to the verification tool KeY and the active object language ABS. Reiner is convinced that research software is essential to validate new concepts and theories in the software sciences. Some notable assignments include being President of Tableaux, Founding Trustee of FLoC, the first Wine Chair of an international conference, member of the Scientific Directorate of Dagstuhl and, since ETAPS 2023, Chair of the Steering Committee of FASE.
