A Glimpse Into Performance Engineering

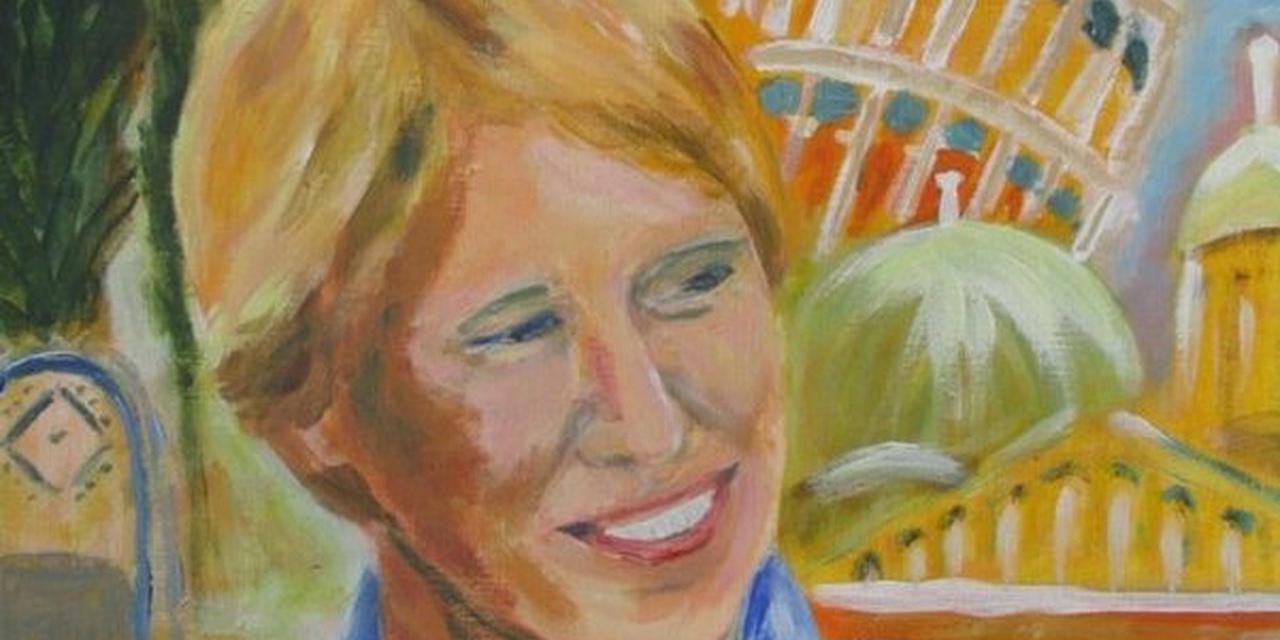

by Georgiana Caltais — 17 April 2023

Topics: Interview

Could you introduce yourself and your research area?

My name is Ana-Lucia Varbanescu and I work in performance engineering. I did my undergraduate and MSc studies at the POLITEHNICA University of Bucharest, Romania. After working for a couple of years as a lecturer at the same university, I decided to start a PhD trajectory at Delft University of Technology, the Netherlands. I worked in the Parallel and Distributed Systems group, focusing on the emerging field of heterogeneous computing: first for embedded systems, in a project together with Philips and VU Amsterdam, and then for high-performance computing. I worked with different platforms, including SpaceCAKE from Philips, Cell/B.E. from IBM (the first heterogeneous multi-core system to be used for general-purpose computing), and GPUs from NVIDIA (the first versions of programmable GPUs using CUDA). My focus was on programmability and on performance analysis and modelling. Later, I learned that these activities are grouped under the field of Performance Engineering. After graduation, I worked as a postdoctoral researcher at Delft University of Technology and VU University of Amsterdam, on high-performance computing for astronomy. In 2013, I started a tenure-track position at the University of Amsterdam (UvA), on a MacGillavry fellowship. I also completed my Veni research at UvA. I was tenured in 2018, and I still work one day per week at UvA. Since 2022, I have worked at the University of Twente, where I aim to extend my research in performance engineering towards zero-waste computing and system-level co-design.

So what is Performance Engineering? It is the research field that provides methods and tools to improve application performance. To this end, we provide methods and tools to reason quantitatively about the performance of applications and systems, from evaluation to analysis, modelling, and prediction. We also provide code transformation recipes to improve performance. Finally, we provide mechanisms for scenario analysis, where performance can be inferred for platforms and systems that are not yet available.

What is the impact of performance engineering on software engineering?

I believe performance engineering is an enabler for all of us to build better applications and systems: faster, more effective, more efficient, and more sustainable. It therefore also enables software engineering to better integrate non-functional requirements into its processes. In turn, software engineering can contribute to better performance engineering by providing clear semantics and execution models, which are easier to model with high accuracy.

Ultimately, I am convinced these two “engineering” sciences will complement each other: if we aim to build efficient systems and high-efficiency/high-performance applications by design, we will rely on effective software engineering tools to build the design space, and effective performance engineering methods and tools to explore it.

What are the most exciting current advances in your field?

Performance Engineering is a very dynamic field: as the systems and applications evolve towards more complexity and more heterogeneity, methods and tools need to evolve, too. Performance metrics evolve, as well, from the usual wall-clock time (or time to solution) to scalability, efficiency, or energy efficiency metrics. As such, three main developments are having a significant impact on Performance Engineering, bringing along challenges and opportunities: effective heterogeneous computing, advances in machine learning, and system co-design.

I loosely define heterogeneous computing as the collaborative use of different types of computing resources to solve a given problem/application. The main challenge of heterogeneous computing is to embrace its own diversity: how can we use all resources effectively and efficiently to provide high performance for a given application? Due to this challenge, heterogeneous computing remains difficult for system designers, application developers, and performance engineers alike. Still, with portable programming models and modular application design, model-based application design for heterogeneous computing has been successful in exploiting such systems. More work is needed, however, to automate these processes and, eventually, allow users to ignore the underlying heterogeneity and build truly portable, “write once, run anywhere” applications. Take, for example, machine-learning platforms like PyTorch or TensorFlow, or linear algebra libraries like BLAS: they can all use the underlying resources of the system without having users worry whether they execute on CPUs, GPUs, or IPUs (Intelligence Processing Units).

The second topic I want to mention is machine learning (ML): this is an external factor that has boosted performance engineering from modelling to performance enhancement. First, statistical, ML-based models have become easier to build and calibrate, due to the adoption of high-productivity tools for such models. Combined with improved ways to collect performance data, we can now automatically build accurate statistical performance models. Their interpretability, however, remains an important, actively researched topic. ML also helps nowadays with the search for better algorithms or more targeted code transformations for better performance and/or efficiency, effectively enabling application-specific optimizations.

Last, but definitely not least, system co-design is an exciting use of performance engineering methods and tools for building efficient, application-tailored computing systems. The core idea is that, by combining user requirements with application models, we can configure a target computing system for a given application such that we achieve perfect efficiency and zero waste. Revisiting our earlier example of machine learning platforms using the system at hand: current solutions use all the resources they have available, and often waste computing cycles of one resource or another at any time; instead, co-design helps determine the configuration where only the needed resources are used, at maximum efficiency. System co-design often combines performance modelling with performance analysis and clever design exploration, and it is only as good as the modelling is accurate. With the current energy crisis in particular, and rising ICT-sustainability concerns in general, zero-waste computing is a research direction that requires rapid progress.

What advice would you give to someone just starting out in this field?

Performance engineering is often like puzzle solving, and many of our students prefer to fiddle with the code and make it run faster rather than reason about the how and the why. I would recommend that those who want to dive into performance engineering focus on the methodological aspects. How can we create better models (and how can we use ML more effectively in our field)? How can we automate the interaction with users, to collect requirements that are easy to use for driving an optimization process and to provide insights that are understandable? How can we use performance engineering in systems and application development at design time? How can we address the ICT sustainability challenge together with application owners and users?

Which paper in your field would you recommend everyone to read?

I would find it difficult to choose, but I believe this Computer article offers a good technical overview of the systems co-design approach: K. Barker, et al., “Using Performance Modeling to Design Large-Scale Systems” in Computer 42(11), 2009.

Can you tell us a bit about your most recently acquired grant?

I am proud to be the PI from UTwente in a large EU project, where we study sustainable large-scale graph processing. Graph-Massivizer will, by the end of 2025, provide an integrated platform for massive graph processing spanning the computing continuum (devices, edge, and cloud) in terms of infrastructure, and graph generation, analytics, and evolution in terms of applications. The project features four exciting case studies: we will demonstrate the use of this technology for event forecasting, data-center operations, industrial process optimization, and financial data generation and analysis. The project has 11 partners, from Austria, Germany, Italy, the Netherlands, Norway, Spain, and Slovenia.

I am also working, together with my colleagues from UvA, on a much smaller, yet very interesting project: EnergyLabels for digital services. Our research focuses on quantifying the energy consumption of digital services running on the entire computing continuum, and on designing a methodology to assign them energy labels, much like those of ordinary appliances. Our hope is that, once such labels exist, users of digital services will actively choose more efficient solutions, even at the cost of speed or convenience. In turn, this can reduce the ICT energy footprint significantly.

This year you will give an invited tutorial at ETAPS. What do you hope attendees take away from your presentation?

My tutorial will focus on heterogeneous computing, which I will use to demonstrate the capabilities of performance engineering, as well as its challenges. I will also introduce the links with computer architecture and software engineering, and advocate the use of co-design techniques for heterogeneous systems towards zero-waste computing. My take-home message is that we are responsible for building software that is correct and maintainable, but also efficient and sustainable, and that heterogeneous computing is, when done right, a path that can provide this outcome. And with the right software and performance engineering methods and tools, we will have such applications running on efficient systems, with performance guarantees.

Finally, who would you like to see interviewed on the ETAPS blog?

I would like to know more about the software and performance engineering practices in big companies. I would love to hear more about the entire process behind libraries from companies like Intel, IBM, or NVIDIA, whose core business started elsewhere, but which now offer integrated systems, from hardware to high-level software engineering tools, that run our world. I would be very curious to learn how specialized their processes are.